Research data management with DataLad

@ IMPRS-MMFD

2 - Hands-on DataLad basics

Stephan Heunis jsheunis

jsheunis

|

Michał Szczepanik mslw

mslw

|

|

|

Psychoinformatics lab,

Institute of Neuroscience and Medicine (INM-7) Research Center Jülich |

Slides: https://psychoinformatics-de.github.io/imprs-mmfd-workshop/

💻Your turn💻

Use what you already know about how and where to get help to complete these challenges on workshop-hub.datalad.org (or on your own system):- Create dataset, add a file with the content "abc". Check the status of the dataset. Now save the dataset with a commit message. Check the status again.

- Create a different dataset *outside* the first one.

- Clone the first dataset into the second under the name "input".

- Use datalad to capture the provenance of a data transformation that converts

the content of the file created at (1) to all-uppercase and saves it in the dataset

from (2). Hint the command:

sh -c 'tr "a-z" "A-Z" < inputpath > outputpath' - Check the status of the dataset. Now let DataLad show you the change

to the dataset that running the

trcommand made.

Let's dissect that, via

A guided code-along through DataLad's Basics and internals

Code:

psychoinformatics-de.github.io/rdm-course/01-content-tracking-with-datalad/index.html

Local version control

Local version control

You created a DataLad dataset:- DataLad's core data structure

- Dataset = A directory managed by DataLad (git + git-annex)

- Any directory of your computer can be managed by DataLad (CV, website, music library, phd)

- Datasets can be created (from scratch) or installed

- Datasets can be nested: linked subdirectories

$ datalad create -c text2git my-datasetWhat is version control?

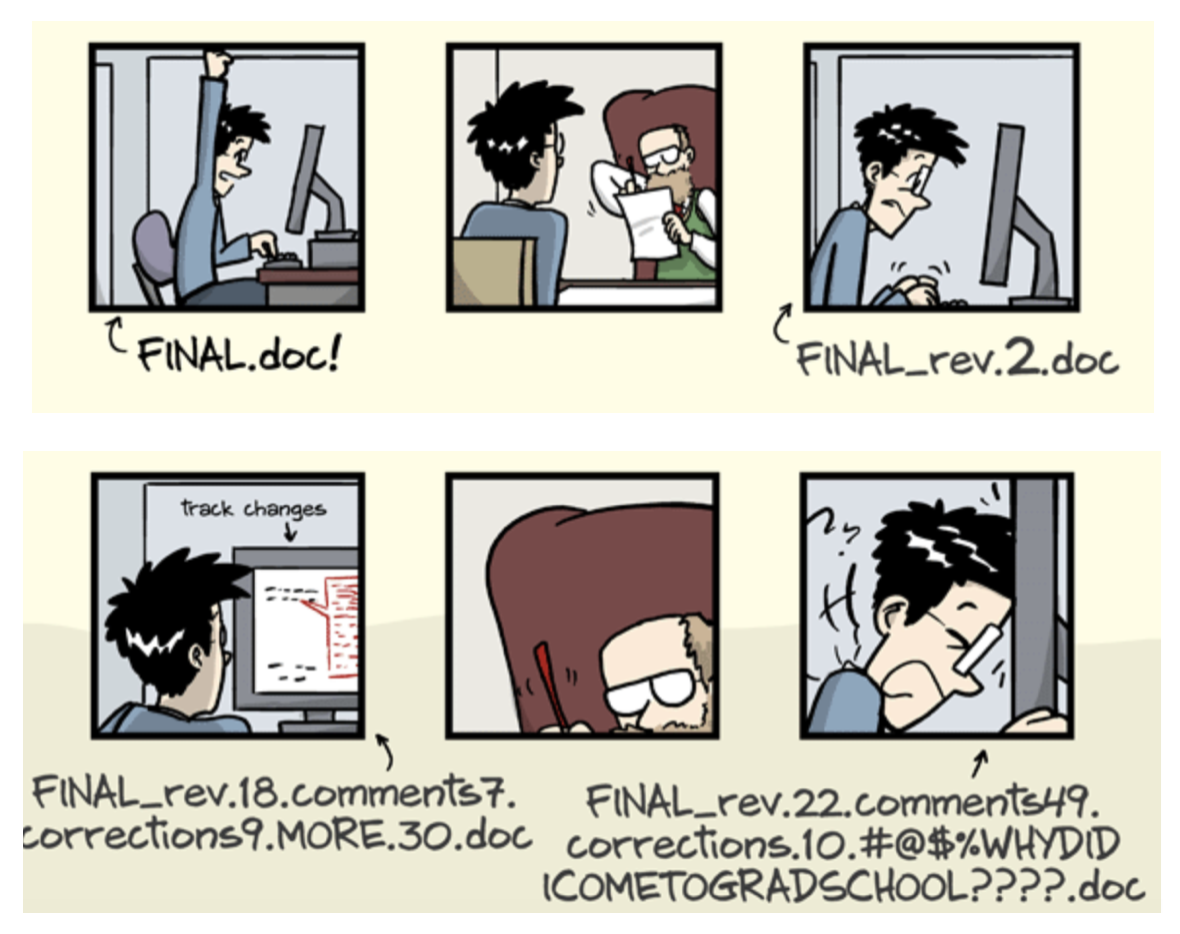

- keep things organized

- keep track of changes

- revert changes or go back to previous states

Why version control?

Version Control

- DataLad knows two things: Datasets and files

Local version control

Procedurally, version control is easy with DataLad!

Advice:

- Save meaningful units of change

- Attach helpful commit messages

Preview: Start to record provenance

- Have you ever saved a PDF to read later onto your computer, but forgot where you got it from?

- Digital Provenance = "The tools and processes used to create a digital file, the responsible entity, and when and where the process events occurred"

-

The history of a dataset already contains provenance, but there is more

to record - for example: Where does a file come from?

datalad download-urlis helpful

Summary - Local version control

datalad createcreates an empty dataset.- Configurations (-c yoda, -c text2git) are useful (details soon).

- A dataset has a history to track files and their modifications.

- Explore it with Git (git log) or external tools (e.g., tig).

datalad saverecords the dataset or file state to the history.- Concise commit messages should summarize the change for future you and others.

datalad download-urlobtains web content and records its origin.- It even takes care of saving the change.

datalad statusreports the current state of the dataset.- A clean dataset status (no modifications, not untracked files) is good practice.

💻Your turn💻

Starting at the Getting started: create an empty dataset section, follow the code-along instructions to:- Create an empty dataset with a

text2gitconfiguration - List the contents of the "empty" dataset

- Explore the dataset's commit history with

tig - Add file content to the dataset

- Inspect dataset changes with

datalad status - Describe and commit/save changes using

datalad save - Inspect the dataset layout with

tree - Capture the origin of a downloaded file in the dataset with

datalad download-url

(stop before section Breaking things and repairing them )

Teaser: Time-travelling

-

Code:

psychoinformatics-de.github.io/rdm-course/01-content-tracking-with-datalad/index.html#breaking-things-and-repairing-them

- Mistakes are not forever anymore: Past changes can transparently be undone

- Become a time-bender: Travel back in time or rewrite history

- Git's various identifiers:

- Commit hash/Commit SHA: A 40-character string identifying each commit

- Branch names, e.g., main

- Tags, e.g., v.0.1

- A pointer to the checked-out (current) commit on the current branch, HEAD

Comprehensive walk-through handbook.datalad.org/basics/101-137-history.html

Summary: Interacting with Git's history (teaser)

- Interactions with Git's history require Git commands, but are immensely powerful

- More in handbook.datalad.org/basics/101-137-history.html

git restoreis a dangerous (!), but sometimes useful command:- It removes unsaved modifications to restore files to a past, saved state. What has been removed by it can not be brought back to life!

git revert [hash]transparently undoes a past commit- It will create a new entry in the revision history about this.

git checkout- lets you - among other things - time-travel.

- Commands that are out of scope but useful to know:

git rebasechanges andgit resetrewinds history without creating a commit about it (see Handbook chapter for examples).- A life-saver that is not well-known:

git reflog - A time-limited backlog of every past performed action, can undo every mistake except

git restoreandgit clean.

💻Your turn💻

At the Breaking things and repairing them section, follow the code-along instructions to:- Edit a file by making unintended changes

- Restore edited versions of a file/dataset with

git restore - Commit incorrect changes to the dataset history

datalad save - Revert incorrect changes in the dataset with

git revert

(stop before section Data processing )

A look underneath the hood

(In-depth explanations how and why things work, with plenty of teasers to additional features)

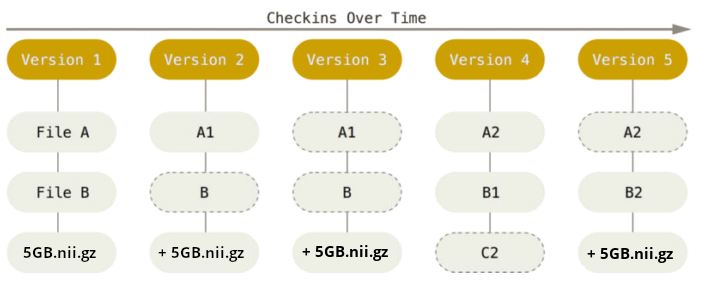

There are two version control tools at work - why?

Git does not handle large files well.

There are two version control tools at work - why?

Git does not handle large files well.

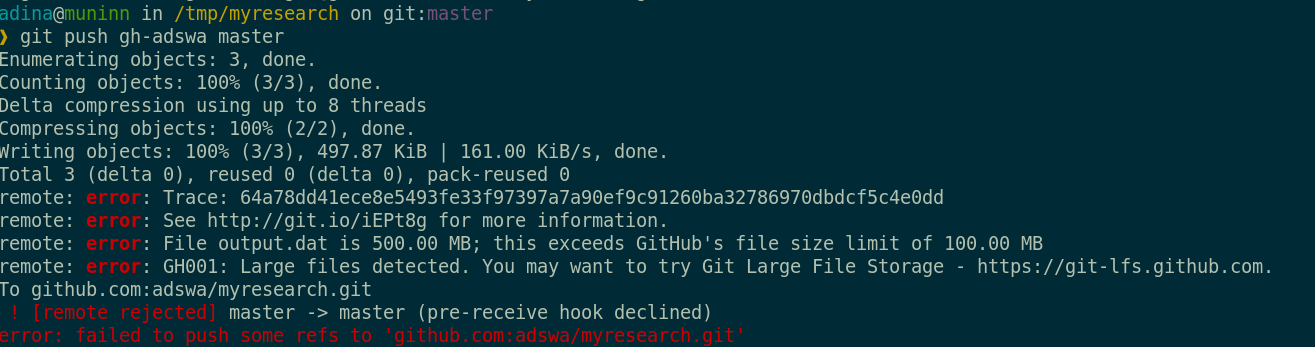

And repository hosting services refuse to handle large files:

git-annex to the rescue! Let's take a look how it works

Consuming datasets

A dataset can be created from scratch/existing directories:

$ datalad create mydataset

[INFO] Creating a new annex repo at /home/adina/mydataset

create(ok): /home/adina/mydataset (dataset)

$ datalad clone https://github.com/datalad-datasets/human-connectome-project-openaccess HCP

install(ok): /tmp/HCP (dataset)

Consuming datasets

- Here's how to get a dataset:

Consuming datasets

- Here's how a dataset looks after installation:

Try it yourself with github.com/datalad-datasets/machinelearning-books.git

Try it yourself with github.com/datalad-datasets/machinelearning-books.git

Plenty of data, but little disk-usage

- Cloned datasets are lean. "Meta data" (file names, availability) are present, but no file content:

$ datalad clone git@github.com:psychoinformatics-de/studyforrest-data-phase2.git

install(ok): /tmp/studyforrest-data-phase2 (dataset)

$ cd studyforrest-data-phase2 && du -sh

18M .$ datalad get sub-01/ses-movie/func/sub-01_ses-movie_task-movie_run-1_bold.nii.gz

get(ok): /tmp/studyforrest-data-phase2/sub-01/ses-movie/func/sub-01_ses-movie_task-movie_run-1_bold.nii.gz (file) [from mddatasrc...]# eNKI dataset (1.5TB, 34k files):

$ du -sh

1.5G .

# HCP dataset (~200TB, >15 million files)

$ du -sh

48G . Git versus Git-annex

- Data in datasets is either stored in Git or git-annex

- By default, everything is annexed, i.e., stored in a dataset annex by git-annex & only content-identity is committed to Git.

- Files stored in Git are modifiable, files stored in Git-annex are content-locked

- Annexed contents are not available right after cloning,

only content identity and availability information (as they are stored in Git).

Everything that is annexed needs to be retrieved with

datalad getfrom whereever it is stored.

| Git | git-annex |

| handles small files well (text, code) | handles all types and sizes of files well |

| file contents are in the Git history and will be shared upon git/datalad push | file contents are in the annex. Not necessarily shared |

| Shared with every dataset clone | Can be kept private on a per-file level when sharing the dataset |

| Useful: Small, non-binary, frequently modified, need-to-be-accessible (DUA, README) files | Useful: Large files, private files |

|

|

|

Useful background information for demo later. Read this handbook chapter for details

Git versus Git-annex

Git versus Git-annex

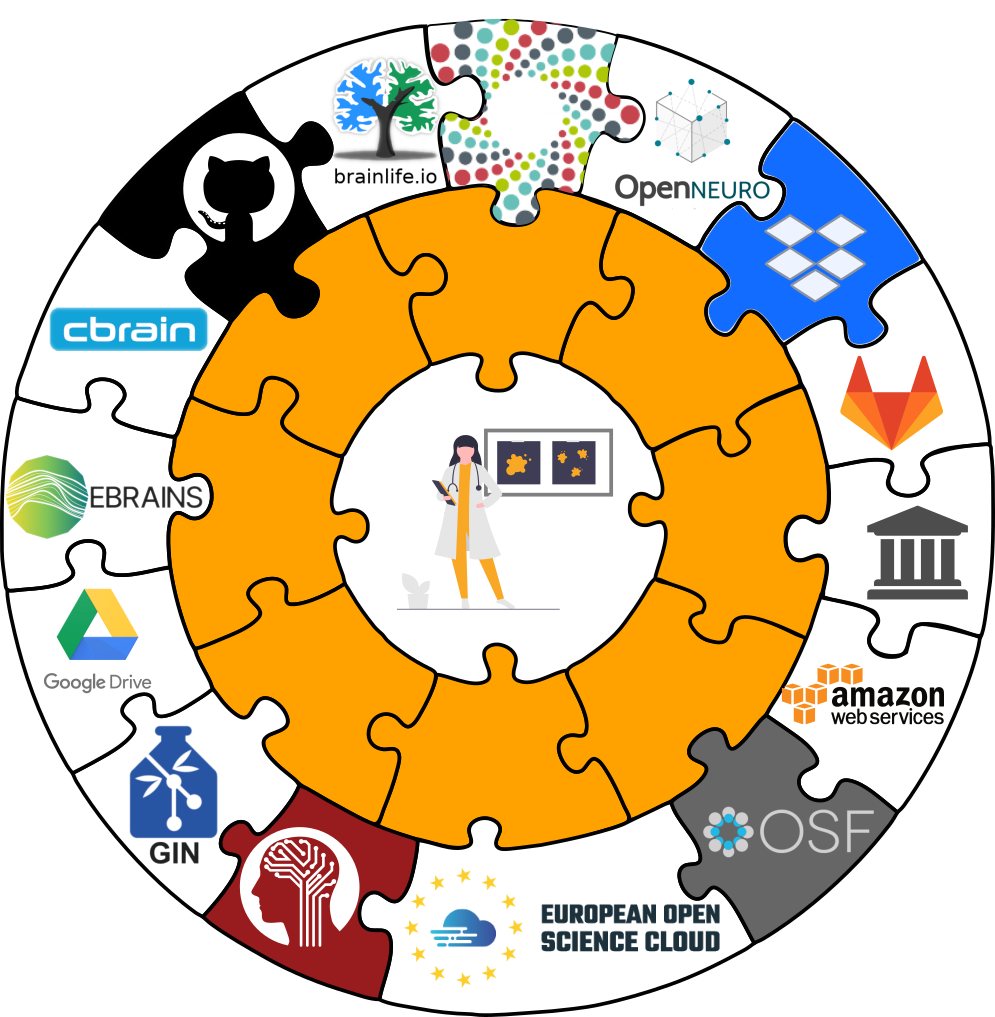

-

When sharing datasets with someone without access to the same computational

infrastructure, annexed data is not necessarily stored together with the rest

of the dataset (more tomorrow in the session on publishing).

-

Transport logistics exist to interface with all major storage providers.

If the one you use isn't supported, let us know!

Git versus Git-annex

-

Users can decide which files are annexed:

- Pre-made run-procedures, provided by DataLad (e.g.,

text2git,yoda) or created and shared by users (Tutorial) - Self-made configurations in

.gitattributes(e.g., based on file type, file/path name, size, ...; rules and examples ) - Per-command basis (e.g., via

datalad save --to-git)

Distributed availability

- git-annex conceptualizes file availability information as a decentral network. A file can exist in multiple different locations. git annex whereis tells you which are known:

$ git annex whereis inputs/images/chinstrap_02.jpg

whereis inputs/images/chinstrap_02.jpg (1 copy)

00000000-0000-0000-0000-000000000001 -- web

c1bfc615-8c2b-4921-ab33-2918c0cbfc18 -- adina@muninn:/tmp/my-dataset [here]

web: https://unsplash.com/photos/8PxCm4HsPX8/download?force=true

ok

- Here is a file with a registered remote location (the web)

$ datalad drop inputs/images/chinstrap_02.jpg

drop(ok): /home/my-dataset/inputs/images/chinstrap_02.jpg (file)

$ datalad get inputs/images/chinstrap_02.jpg

get(ok): inputs/images/chinstrap_02.jpg (file)

$ datalad drop inputs/images/chinstrap_01.jpg

drop(error): inputs/images/chinstrap_01.jpg (file)

[unsafe; Could only verify the existence of 0 out of 1 necessary copy;

(Use --reckless availability to override this check, or adjust numcopies.)]Delineation and advantages of decentral versus central RDM: In defense of decentralized research data management

Data protection

Why are annexed contents write-protected? (part I)- Where the filesystem allows it, annexed files are symlinks:

(PS: especially useful in datasets with many identical files)$ ls -l inputs/images/chinstrap_01.jpg lrwxrwxrwx 1 adina adina 132 Apr 5 20:53 inputs/images/chinstrap_01.jpg -> ../../.git/annex/objects/1z/ xP/MD5E-s725496--2e043a5654cec96aadad554fda2a8b26.jpg/MD5E-s725496--2e043a5654cec96aadad554fda2a8b26.jpg - The symlink reveals git-annex internal data organization based on identity hash:

$ md5sum inputs/images/chinstrap_01.jpg 2e043a5654cec96aadad554fda2a8b26 inputs/images/chinstrap_01.jpg - git-annex write-protects files to keep this symlink functional - Changing file contents without git-annex knowing would make the hash change and the symlink point to nothing

- To (temporarily) remove the write-protection one can unlock the file

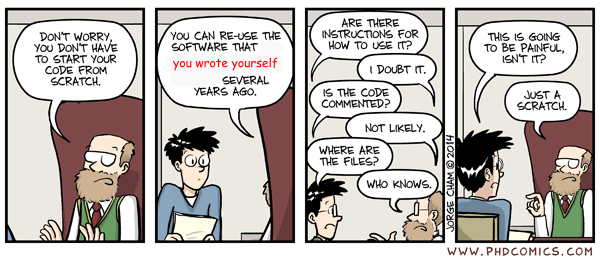

Detour & Teaser: Reproducible data analysis

Your past self is the worst collaborator:

Reproducible execution & provenance capture

datalad run wraps a command execution and records its impact on a dataset.

Reproducible execution & provenance capture

datalad run wraps a command execution and records its impact on a dataset.

commit 9fbc0c18133aa07b215d81b808b0a83bf01b1984 (HEAD -> main)

Author: Adina Wagner [adina.wagner@t-online.de]

Date: Mon Apr 18 12:31:47 2022 +0200

[DATALAD RUNCMD] Convert the second image to greyscale

=== Do not change lines below ===

{

"chain": [],

"cmd": "python code/greyscale.py inputs/images/chinstrap_02.jpg outputs/im>

"dsid": "418420aa-7ab7-4832-a8f0-21107ff8cc74",

"exit": 0,

"extra_inputs": [],

"inputs": [],

"outputs": [],

"pwd": "."

}

^^^ Do not change lines above ^^^

diff --git a/outputs/images_greyscale/chinstrap_02_grey.jpg b/outputs/images_gr>

new file mode 120000

index 0000000..5febc72

--- /dev/null

+++ b/outputs/images_greyscale/chinstrap_02_grey.jpg

@@ -0,0 +1 @@

+../../.git/annex/objects/19/mp/MD5E-s758168--8e840502b762b2e7a286fb5770f1ea69.>

\ No newline at end of file

The resulting commit's hash (or any other identifier) can be used to automatically re-execute a computation (more on this tomorrow)

Data protection

Why are annexed contents write-protected? (part 2)- When you try to modify an annexed file without unlocking you will see

"Permission denied" errors.

Traceback (most recent call last): File "/home/bob/Documents/rdm-warmup/example-dataset/code/greyscale.py", line 20, in module grey.save(args.output_file) File "/home/bob/Documents/rdm-temporary/venv/lib/python3.9/site-packages/PIL/Image.py", line 2232, in save fp = builtins.open(filename, "w+b") PermissionError: [Errno 13] Permission denied: 'outputs/images_greyscale/chinstrap_02_grey.jpg' - Use datalad unlock to make the file modifiable.

Underneath the hood (given the file system initially supported symlinks), this removes the symlink:

$ datalad unlock outputs/images_greyscale/chinstrap_02_grey.jpg $ ls outputs/images_greyscale/chinstrap_02_grey.jpg -rw-r--r-- 1 adina adina 758168 Apr 18 12:31 outputs/images_greyscale/chinstrap_02_grey.jpg - datalad save locks the file again. Locking and unlocking ensures that git-annex always finds the right version of a file.

Reproducible execution & provenance capture

datalad run wraps a command execution and records its impact on a dataset.

In addition, it can take care of data retrieval and unlocking

datalad rerun

-

datalad rerunis helpful to spare others and yourself the short- or long-term memory task, or the forensic skills to figure out how you performed an analysis - But it is also a digital and machine-reable provenance record

- Important: The better the run command is specified, the better the provenance record

- Note: run and rerun only create an entry in the history if the command execution leads to a change.

- Task: Use

datalad rerunto rerun the script execution. Find out if the output changed

Summary - Underneath the hood

- Files are either kept in Git or in git-annex.

- datalad save is used for both, but configurations (e.g., text2git), dataset rules (e.g., in a .gitattributes file, or flags change the default behavior of annexing everything

- Annexed files behave differently from files kept in Git:

- They can be retrieved and dropped from local or remote locations, they are write-protected, their content is unkown to Git (and thus easy to keep private).

- datalad clone installs datasets from URLs or local or remote paths

- Annexed files contents can be retrieved or dropped on demand, file contents of files stored in Git are available right away.

- datalad unlock makes annexed files modifiable, datalad save locks them again.

- (It is generally easier to get accidentally saved files out of the annex than out of Git - see handbook.datalad.org/basics/101-136-filesystem.html for examples)

- datalad run records the impact of any command execution in a dataset.

- Data/directories specified as

--inputare retrieved prior to command execution, data/directories specified as--outputunlocked. datalad reruncan automatically re-execute run-records later.- They can be identified with any commit-ish (hash, tag, range, ...)

💻Your turn💻

Starting at the Data processing section, follow the code-along instructions to:- Save the output of a script changing a file with

datalad save - Save the output AND provenance of a script changing a file with

datalad run - Try to edit an annex and lock-protected file

- Unlock an annexed file with

datalad unlock - Save and lock an unlocked annexed file with

datalad saveordatalad run

(continue until the end of the page)

Dropping and removing stuff

What to do with files you don't want to keep?datalad drop and datalad removeCode: psychoinformatics-de.github.io/rdm-course/92-filesystem-operations

Drop & remove

- Try to remove (rm) one of the pictures in your dataset. What happens?

- Version control tools keep a revision history of your files - file contents are not actually removed when you rm them. Interactions with the revision history of the dataset can bring them "back to life"

A complete overview of file system operations is in handbook.datalad.org/en/latest/basics/101-136-filesystem.html

Drop & remove

- Clone a small example dataset to drop file contents and remove datasets:

$ datalad clone https://github.com/datalad-datasets/machinelearning-books.git $ cd machinelearning-books $ datalad get A.Shashua-Introduction_to_Machine_Learning.pdf - datalad drop removes annexed file contents from a local dataset

annex and frees up disk space. It is the antagonist of get (which can get files and subdatasets).

$ datalad drop A.Shashua-Introduction_to_Machine_Learning.pdf drop(ok): /tmp/machinelearning-books/A.Shashua-Introduction_to_Machine_Learning.pdf (file) [checking https://arxiv.org/pdf/0904.3664v1.pdf...] - But: Default safety checks require that dropped files can be re-obtained to prevent accidental data loss. git annex whereis reports all registered locations of a file's content

- drop does not only operate on individual annexed files,

but also directories, or globs, and it can uninstall subdatasets:

$ datalad clone https://github.com/datalad-datasets/human-connectome-project-openaccess.git $ cd human-connectome-project-openaccess $ datalad get -n HCP1200/996782 $ datalad drop --what all HCP1200/996782

Drop & remove

- datalad remove removes complete dataset or dataset hierarchies

and leaves no trace of them. It is the antagonist to clone.

# The command operates outside of the to-be-removed dataset! $ datalad remove -d . machinelearning-books uninstall(ok): /tmp/machinelearning-books (dataset) - But: Default safety checks require that it could be re-cloned in its most recent version from other places, i.e., that there is a sibling that has all revisions that exist locally datalad siblings reports all registered siblings of a dataset.

Drop & remove

- Create a dataset from scratch and add a file

$ datalad create local-dataset $ cd local-dataset $ echo "This file content will only exist locally" > local-file.txt $ datalad save -m "Added a file without remote content availability" - datalad drop refuses to remove annexed file contents if it

can't verify that datalad get could re-retrieve it

$ datalad drop local-file.txt $ drop(error): local-file.txt (file) [unsafe; Could only verify the existence of 0 out of 1 necessary copy; (Note that these git remotes have annex-ignore set: origin upstream); (Use --reckless availability to override this check, or adjust numcopies.)] - Adding --reckless availability overrides this check

$ datalad drop local-file.txt --reckless availability - Be mindful that drop will only operate on the most recent version of a file - past versions may still exist afterwards unless you drop them specifically. git annex unused can identify all files that are left behind

Drop & remove

- datalad remove refuses to remove

datasets without an up-to-date sibling

$ datalad remove -d local-dataset uninstall(error): . (dataset) [to-be-dropped dataset has revisions that are not available at any known sibling. Use `datalad push --to ...` to push these before dropping the local dataset, or ignore via `--reckless availability`. Unique revisions: ['main']] - Adding --reckless availability overrides this check

$ datalad remove -d local-dataset --reckless availability

Removing wrongly

-

Using a file browser or command line calls like rm -rf on datasets is doomed to fail.

Recreate the local dataset we just removed:

$ datalad create local-dataset $ cd local-dataset $ echo "This file content will only exist locally" > local-file.txt $ datalad save -m "Added a file without remote content availability" - Removing it the wrong way causes chaos and leaves an usuable dataset corpse behind:

$ rm -rf local-dataset rm: cannot remove 'local-dataset/.git/annex/objects/Kj/44/MD5E-s42--8f008874ab52d0ff02a5bbd0174ac95e.txt/ MD5E-s42--8f008874ab52d0ff02a5bbd0174ac95e.txt': Permission denied - The dataset can't be fixed, but to remove the corpse chmod (change file mode bits) it (i.e., make it writable)

$ chmod +w -R local-dataset $ rm -rf local-dataset

💻Your turn💻

Follow the code-along instructions at Removing datasets and files to:- Obtain a dataset with

datalad clone - Retrieve file content with

datalad get - Inspect local and remote file content availability with

git annex whereis - Remove a local copy of file content with

datalad drop - Inspect a dataset's remotes/siblings with

datalad siblings - Remove a local clone of a dataset with

datalad remove - Unsafely remove a local clone of a dataset or copy of a file with

datalad drop/remove --reckless availability

(continue until the end of the page)